-

[Solved] AMD Radeon 9070 XT Random Black Screen on Arch Linux

I have been an nVidia fan for quite a while (who wasn’t), until recently. There are quite a few positive reviews of AMD Radeon cards on Linux, and AMD’s Linux driver support has improved a lot. When I decided to upgrade from my good old nVidia RTX 3080, I thought I might just give AMD Radeon…

-

eGPU on Arch Linux – Not As Hard As I Expected

A few years ago, I bought a bulky nVidia RTX 3080 GPU as part of my venture into crypto mining. I also DIYed a thermal pad upgrade for it so it runs cooler and quieter. But since Ethereum’s PoS merge in 2022, it’s no longer mineable with GPUs, so my 3080 started to…

-

Arch Linux on Alienware M18R2 and What Works in 2025

As 2024 draws to a close, I decided to try Arch Linux on my new massive Alienware M18R2 laptop. The last time I tried Arch Linux was years ago, so I expected it to be much easier to install now, but probably not as easy as installing Ubuntu or Fedora. Preparation: To preserve the factory partition with…

-

Fixed an A2 Error on ASUS Rampage V Extreme

I have been a bit obsessed with PCs since my parents bought me my first PC, a 386DX with 4MB of memory, almost 30 years ago. Recently, one of my friends was selling his crypto mining rig, and I bought the essential parts to start building a new server: I bought a new fan for…

-

Ubuntu Server 20.04 on ThinkPad W520

Ten years ago, I would have dreamed of a ThinkPad W520 laptop: imagine 4 cores, 8 threads, 32GB of memory and a 160GB SSD in 2012! I saw one of these old battleship-class laptops on Gumtree the other day, so I bought it without much hesitation. It’s still very good as a mini server even in 2022. In…

-

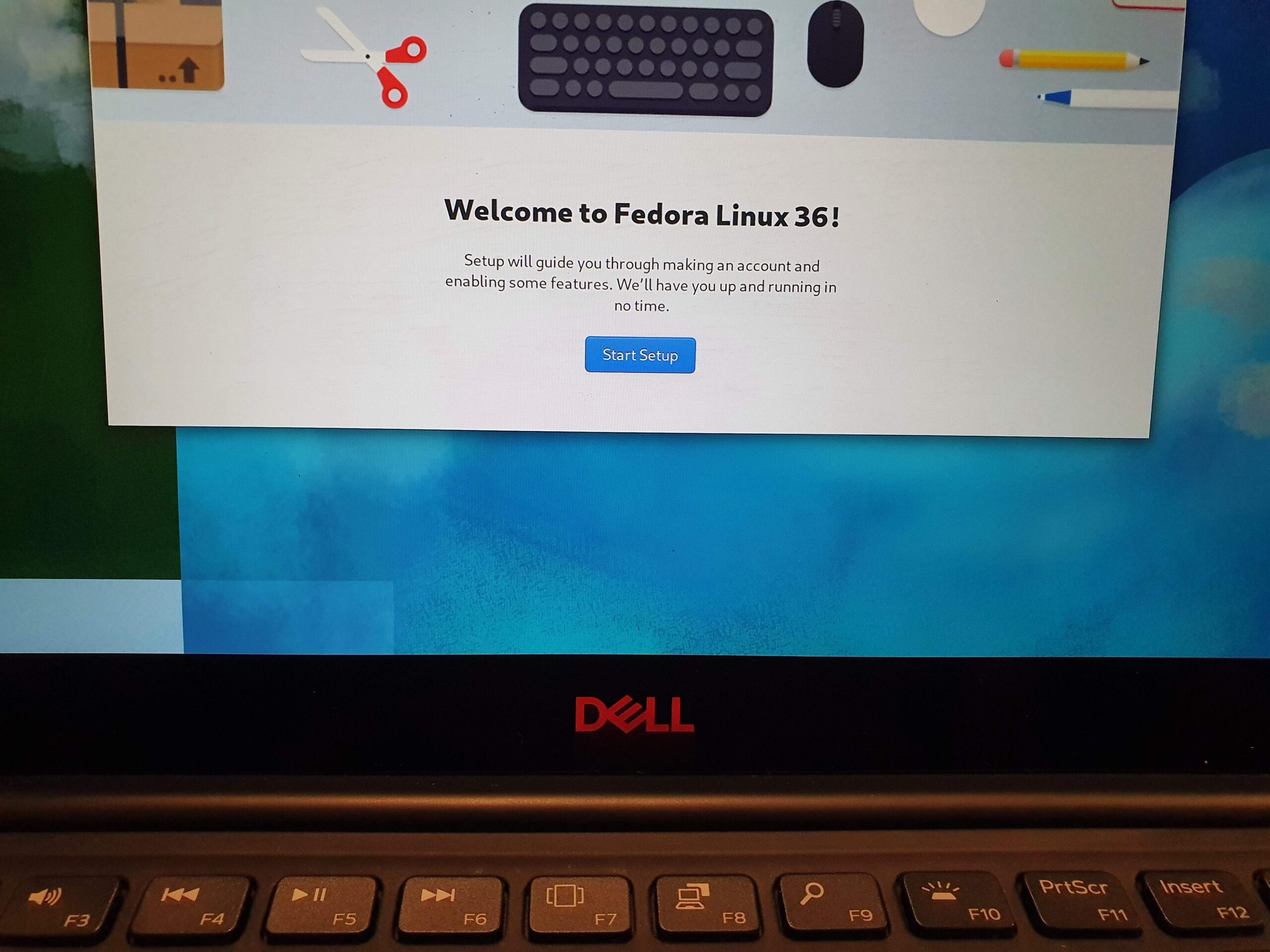

Installation of Fedora 36 on Dell XPS 13 9380

I recently acquired a used Dell XPS 13 9380, a nice little ultrabook with an Intel 8th gen i7 CPU, 16GB of memory (not upgradeable), a 512GB NVMe SSD and a beautiful 13″ 4K screen. It came with Windows 10 installed. Of course, I have no intention of continuing to use the stock Windows 10. At…

-

Mining Ethereum with Multiple AMD 6600 XT Cards on Ubuntu Linux

Warning: Ethereum (ETH) will migrate to the PoS (Proof of Stake) algorithm in the near future, maybe within a year, so jumping into ETH mining now might not be profitable. Also, I encourage crypto mining with renewable energy sources, and a Tesla Powerwall 2 is just a few RTX 3090s away 🙂 Note: This is a follow-up to my…

-

My nVidia RTX 3080 ThermalRight Upgrade

Recently I traded my 2x Gigabyte RTX 3070 for an Aorus Master RTX 3080 for several reasons: for Ethereum crypto mining, a 3080 can achieve ~100 MH/s, which is very close to what 2x 3070 can do; 1x 3080 definitely consumes less power than 2x 3070; if I play games or VR, only…