TL;DR: As of August 2022, Google Cloud SQL has no out-of-the-box load balancing for read replicas. So I built one for MySQL replicas with HAProxy, which reloads dynamically whenever the number of replicas changes.

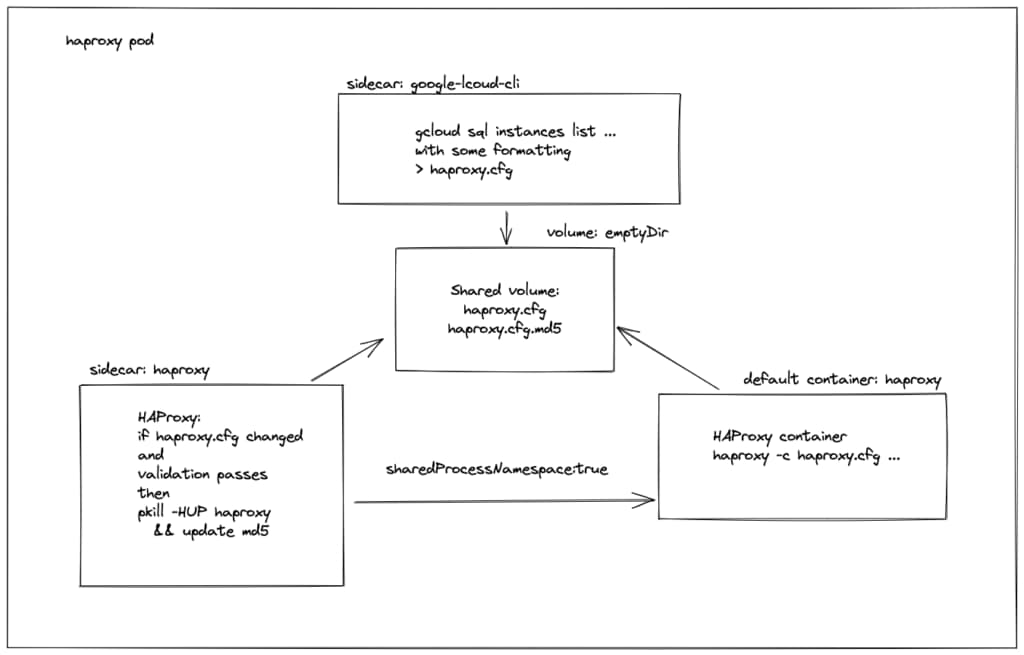

It is quite straightforward to run a few HAProxy pods in Kubernetes as a service. To reload the HAProxy pods seamlessly, without dropping MySQL connections, I came up with the design above, which uses 2 sidecars and a shared volume to do the job.

The gcloud sidecar

The official gcloud container image (gcr.io/google.com/cloudsdktool/google-cloud-cli) is used with the entry-point script below, which lists the replica instances and generates the config file for HAProxy:

    #!/bin/bash
    # rather fail than generate a broken config
    set -eu

    function generate_config {
      # template for the HAProxy config
      cat <<EOF
    global
        stats socket /var/run/haproxy.sock mode 600 expose-fd listeners level user

    defaults
        mode tcp
        timeout client 3600s
        timeout connect 5s
        timeout server 3600s

    frontend stats
        bind 0.0.0.0:8404
        mode http
        stats enable
        stats uri /stats
        stats refresh 10s
        http-request use-service prometheus-exporter if { path /metrics }

    frontend mysql
        bind 0.0.0.0:3306
        default_backend mysql_replicas

    backend mysql_replicas
        balance leastconn
    EOF

      # list Cloud SQL replicas and format the output as HAProxy backend servers
      /google-cloud-sdk/bin/gcloud sql instances list -q \
        --filter="labels.host-class=replica" \
        --format='value(name, ipAddresses.ipAddress)' \
      | while read -r instance_name instance_ip; do
          echo "    server $instance_name $instance_ip:3306 check"
        done
    }

    # loop to check for changes continuously
    while true; do
      echo "Generating a haproxy config file..."
      generate_config > "$HAPROXY_CONFIG_FILE"
      echo "Waiting for $RELOAD_INTERVAL seconds..."
      sleep "$RELOAD_INTERVAL"
    done

The HAProxy sidecar

I use the official HAProxy container image to check for and validate configuration changes; if validation passes, it reloads the main HAProxy container. Here’s the entry-point script:

    #!/bin/sh
    # rather let it fail than do something strange
    set -eu

    md5_file="${HAPROXY_CONFIG_FILE}.md5"

    test_config_file() {
      # if the md5 checksum exists and it matches the config file
      # then the config file is considered unchanged
      # (piped into diff, since <(...) process substitution is not POSIX sh)
      if [ -f "$md5_file" ] && md5sum "$HAPROXY_CONFIG_FILE" | diff - "$md5_file"; then
        return 1
      fi
      # otherwise the config file has been updated and needs to be validated
      /usr/local/sbin/haproxy -f "$HAPROXY_CONFIG_FILE" -c
      return $?
    }

    while true; do
      if test_config_file; then
        echo "Configuration has been updated and validated..."
        # update the checksum
        md5sum "$HAPROXY_CONFIG_FILE" > "$md5_file"
        echo "Reloading the HAProxy..."
        pkill -HUP haproxy
      fi
      echo "Waiting for $RELOAD_INTERVAL seconds..."
      sleep "$RELOAD_INTERVAL"
    done
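One thing to keep in mind with the two loops: the gcloud sidecar rewrites the config file in place, so the reloader could in principle wake up while the file is only partly written, and a backend without any `server` lines is still a valid HAProxy config. A minimal sketch of one way to guard against that, assuming a hypothetical helper named `publish_config` (not part of the scripts above) that writes to a temporary file in the same directory and only renames it into place when the generator succeeded and produced at least one server line:

```shell
#!/bin/bash
set -eu

# Hypothetical helper, not part of the original scripts.
# Usage: publish_config <target-file> <generator-command> [args...]
publish_config() {
  local target=$1
  shift
  local tmp
  # temp file next to the target, so the final mv is a rename on the same volume
  tmp=$(mktemp "${target}.XXXXXX")
  # "$@" is the generator command, e.g. generate_config
  if "$@" > "$tmp" && grep -q '^ *server ' "$tmp"; then
    chmod 644 "$tmp"      # mktemp creates the file with mode 600
    mv "$tmp" "$target"   # readers see either the old or the new file
  else
    rm -f "$tmp"          # keep the previous config untouched
    return 1
  fi
}
```

In the generator loop this would replace the plain redirect, for example `publish_config "$HAPROXY_CONFIG_FILE" generate_config || echo "Keeping the previous config"`. The md5 check in the reloader works unchanged, since it only looks at the final file.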

All together

Note: I didn’t include the ConfigMap containing the 2 scripts above, nor the Service and ServiceAccount resources.
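For completeness, a minimal sketch of how those omitted resources could be created with kubectl. It assumes the two scripts are saved locally as `config-gen-loop.sh` and `config-reloader-loop.sh` (the file names the container commands in the manifest expect) and that the Service simply reuses the Deployment's name; the `KUBECTL` variable and the function name are illustrative only.

```shell
#!/bin/bash
set -eu

# KUBECTL can be overridden, e.g. KUBECTL="echo kubectl", to preview the commands.
KUBECTL=${KUBECTL:-kubectl}
NAMESPACE=cloudsql

# Hypothetical helper: creates the resources the manifest refers to but does not define.
create_supporting_resources() {
  # ConfigMap holding the two entry-point scripts (mounted at /usr/local/bin/haproxy)
  $KUBECTL create configmap haproxy-scripts --namespace "$NAMESPACE" \
    --from-file=config-gen-loop.sh \
    --from-file=config-reloader-loop.sh

  # ServiceAccount referenced by serviceAccountName in the Deployment
  $KUBECTL create serviceaccount haproxy --namespace "$NAMESPACE"

  # Service in front of the HAProxy pods (the Service name is an assumption)
  $KUBECTL expose deployment cloudsql-haproxy --namespace "$NAMESPACE" \
    --name=cloudsql-haproxy --port=3306 --target-port=3306
}
```

Calling `create_supporting_resources` runs the three commands in order; `expose` needs the Deployment to exist already, so it goes last. The Kubernetes service account still has to be tied to an identity holding the cloudsql.viewer role (for example via Workload Identity on GKE), which this sketch does not cover. The Deployment itself follows.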

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: cloudsql-haproxy
      name: cloudsql-haproxy
      namespace: cloudsql
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: cloudsql-haproxy
      template:
        metadata:
          annotations:
            # for prometheus scraping; the stats frontend serves /metrics on port 8404
            prometheus.io/port: "8404"
            prometheus.io/scrape: "true"
          labels:
            app: cloudsql-haproxy
        spec:
          containers:
          - image: haproxy:2.6.2-alpine
            name: cloudsql-haproxy
            ports:
            - containerPort: 3306
              name: mysql
              protocol: TCP
            - containerPort: 8404
              name: stats
              protocol: TCP
            readinessProbe:
              failureThreshold: 3
              httpGet:
                path: /stats
                port: 8404
                scheme: HTTP
              initialDelaySeconds: 5
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 1
            resources:
              requests:
                cpu: 500m
                memory: 200Mi
            volumeMounts:
            - mountPath: /usr/local/etc/haproxy
              name: config-dir
          - command:
            - /usr/local/bin/haproxy/config-gen-loop.sh
            env:
            - name: HAPROXY_CONFIG_FILE
              value: /usr/local/etc/haproxy/haproxy.cfg
            - name: RELOAD_INTERVAL
              value: "300"
            image: gcr.io/google.com/cloudsdktool/google-cloud-cli:397.0.0-alpine
            name: gcloud
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
            volumeMounts:
            - mountPath: /usr/local/etc/haproxy
              name: config-dir
            - mountPath: /usr/local/bin/haproxy
              name: haproxy-scripts
          - command:
            - /usr/local/bin/haproxy/config-reloader-loop.sh
            env:
            - name: HAPROXY_CONFIG_FILE
              value: /usr/local/etc/haproxy/haproxy.cfg
            - name: RELOAD_INTERVAL
              value: "20"
            image: haproxy:2.6.2-alpine
            name: reloader
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
            volumeMounts:
            - mountPath: /usr/local/etc/haproxy
              name: config-dir
            - mountPath: /usr/local/bin/haproxy
              name: haproxy-scripts
          # gcloud container runs as root by default, so it's easier to let haproxy run as root as well
          securityContext:
            runAsUser: 0
          # this service account needs the cloudsql.viewer IAM role
          serviceAccount: haproxy
          serviceAccountName: haproxy
          # important line to allow inter-container process signaling
          shareProcessNamespace: true
          volumes:
          - configMap:
              # ensure the scripts are executable
              defaultMode: 0550
              name: haproxy-scripts
            name: haproxy-scripts
          - emptyDir: {}
            name: config-dir

🙂